The developer platform team is tasked with empowering third-party developers to build new products on top of Webflow.

For members of that team — including myself — the goal is to build robust and reliable systems that developers can depend on to successfully serve data to and from external applications that may be in use across hundreds of thousands of websites.

A key method of communication between platforms and third-party applications is the webhook, which keeps data synchronized across systems in near real time. For large content management systems, webhook events can contain large, unbounded amounts of data that can be difficult to propagate due to their size.

Below, read more about how our team overcame the SQS client’s payload constraints by extending its interface to process and deliver sizable events for our webhook domain needs.

The SQS client payload limitation

Amazon Simple Queue Service (SQS) powers many domains and services within Webflow’s backend. To interface with SQS, we use Amazon’s SDK client, which has a maximum payload size of 256 KiB per message. Given this constraint, our team was quickly blocked from delivering large webhook events, whose content was packaged and enqueued in an SQS message intended to be processed downstream by a webhook queue worker.
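To make the constraint concrete, here is a minimal sketch of the kind of size check a producer would need before enqueueing. The function and constant names are hypothetical and not taken from our codebase. The check measures the UTF-8 encoded size, because string length undercounts multi-byte characters.

```typescript
// Assumed limit from the text above: 256 KiB per SQS message.
const SQS_MAX_BYTES = 256 * 1024;

// Size of the body once encoded as UTF-8. String length alone would
// undercount any character that needs more than one byte.
function utf8ByteLength(body: string): number {
  return new TextEncoder().encode(body).length;
}

// True when the body alone is too large to send inline.
// Note: this sketch ignores message attributes, which also count
// toward the SQS message size in a real queue.
function exceedsSqsLimit(body: string): boolean {
  return utf8ByteLength(body) > SQS_MAX_BYTES;
}
```

A body of exactly 256 KiB passes this check, while one more byte fails it.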

Some of our engineering teams had previously worked around the SQS client limitation with custom-built solutions that stored pointers to resources in the message contents, to be queried from a datastore at a later time. However, a webhook is a snapshot of an event that might only exist for a limited period of time, and a pointer resolved later can return data that has since changed or been removed. It was important for our team to find a better way to store the event specifics so that the data integrity of the webhook event remained intact.

Data storage considerations

When evaluating datastores for large message contents, we looked at three options already in use in our environment: S3, Redis, and MongoDB. Storage size was the main problem we were looking to solve, and S3 was the clear winner over the other options when it came to storage capacity for cardinal data.

Other deciding factors for our team included data durability, scalability, and cost, all areas where S3 shone. We were less concerned with high throughput or low latency, which are the strengths of another one of our options, Redis. Additionally, using an Amazon-managed service like S3 meant we did not have to manually manage the datastore’s infrastructure to maintain consistency, availability, and data partitioning.

Solution approach and implementation

Our approach to storing large message payloads in S3 is not a novel idea: Amazon open-sources extended clients in both Python and Java that achieve the same functionality. However, Amazon did not offer a library in the language of our tech stack, so we opted to write an in-house solution.

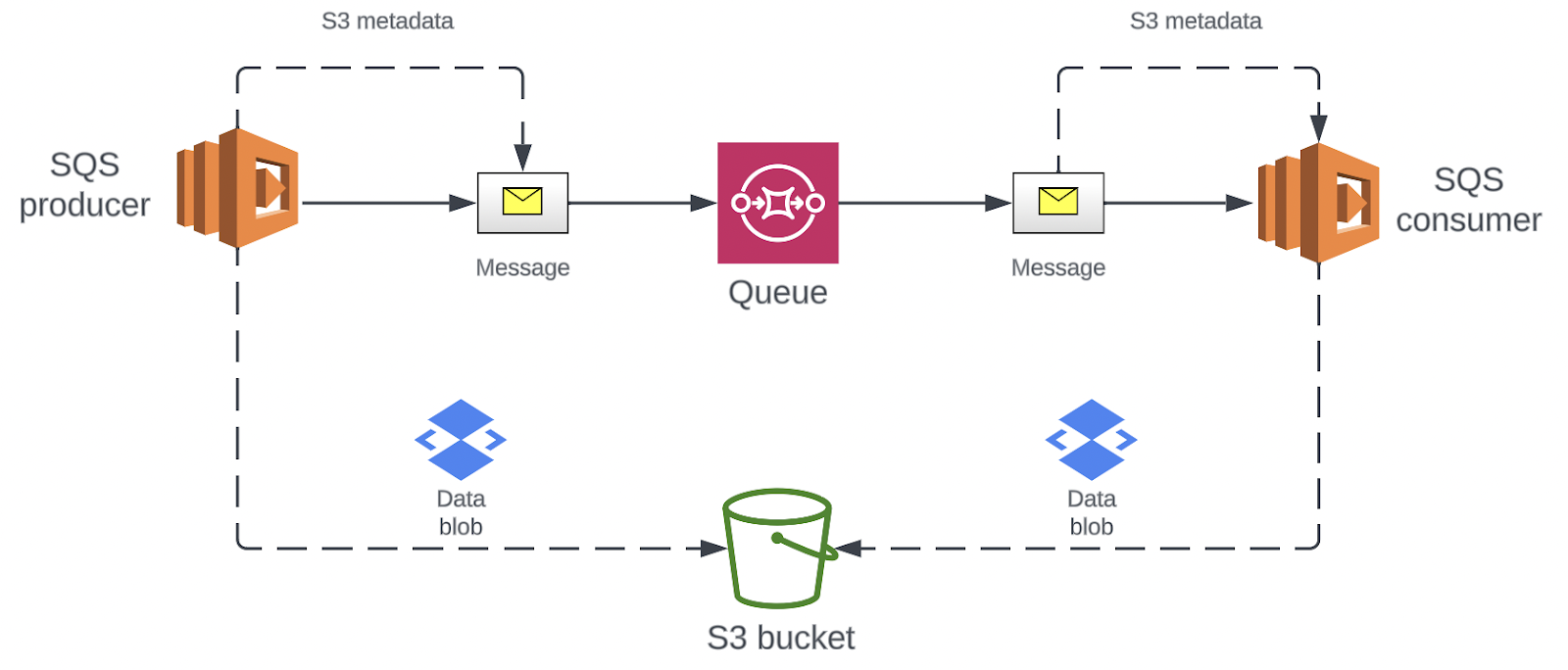

To get started, we extended our batch SQS client interface to store a message’s payload in S3 if and only if it exceeded 256 KiB. We also updated large messages’ headers with a reserved key-value pair whose value is a pointer to the location of the message data in S3. When any message is read off the queue, the client first checks whether this key exists in the header to determine whether the message payload must be pulled from S3. All of this is abstracted away, so no SQS message producer or consumer across our products needs any knowledge of the data storage specifics.
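The flow described above can be sketched roughly as follows. This is an illustration under stated assumptions, not our production client. Every name here (`ExtendedQueueClient`, `ObjectStore`, `S3_POINTER_KEY`, the `large-payloads/` key scheme) is hypothetical, and an in-memory array and map stand in for SQS and S3 so the example runs without AWS credentials.

```typescript
const MAX_INLINE_BYTES = 256 * 1024;
// Assumed name for the reserved header key; the real key is not given here.
const S3_POINTER_KEY = "x-large-payload-s3-key";

interface QueueMessage {
  headers: Record<string, string>;
  body: string;
}

// Minimal storage interface; a real implementation would call S3.
interface ObjectStore {
  put(key: string, data: string): Promise<void>;
  get(key: string): Promise<string>;
}

class InMemoryStore implements ObjectStore {
  private readonly objects = new Map<string, string>();
  async put(key: string, data: string): Promise<void> {
    this.objects.set(key, data);
  }
  async get(key: string): Promise<string> {
    const data = this.objects.get(key);
    if (data === undefined) throw new Error(`missing object: ${key}`);
    return data;
  }
}

class ExtendedQueueClient {
  private readonly store: ObjectStore;
  private readonly queue: QueueMessage[] = []; // stand-in for an SQS queue
  private nextId = 0;

  constructor(store: ObjectStore) {
    this.store = store;
  }

  // Producers call send() the same way regardless of payload size.
  async send(body: string, headers: Record<string, string> = {}): Promise<void> {
    const size = new TextEncoder().encode(body).length;
    if (size <= MAX_INLINE_BYTES) {
      this.queue.push({ headers: { ...headers }, body });
      return;
    }
    // Oversized: store the payload, enqueue only a pointer in the headers.
    const key = `large-payloads/${this.nextId++}`;
    await this.store.put(key, body);
    this.queue.push({ headers: { ...headers, [S3_POINTER_KEY]: key }, body: "" });
  }

  // Consumers always get the full body back; the pointer is hidden from them.
  async receive(): Promise<QueueMessage | undefined> {
    const raw = this.queue.shift();
    if (raw === undefined) return undefined;
    const key = raw.headers[S3_POINTER_KEY];
    if (key === undefined) return raw;
    const headers = { ...raw.headers };
    delete headers[S3_POINTER_KEY];
    return { headers, body: await this.store.get(key) };
  }
}
```

Because the size check and the pointer lookup both live inside the client, callers of `send` and `receive` behave identically for small and large payloads.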

To offload the deletion of S3 entries for large SQS payload messages, we configured an S3 lifecycle policy to automatically delete stored payloads whose age exceeds the SQS message retention period. This simplified the client’s implementation by eliminating a round-trip network call to S3 after a message is successfully read and processed. Compared to having the client perform S3 deletions itself, this improved performance and removed the need for S3 retry handling when dequeuing a message.
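As a rough illustration, an expiration rule can be derived from the queue’s retention period like this. The object shape follows the S3 lifecycle configuration fields (`Rules`, `Filter`, `Expiration`), but the rule ID, the key prefix, and the one-day safety margin are assumptions made for this sketch rather than our actual settings.

```typescript
const SECONDS_PER_DAY = 86400;

interface LifecycleRule {
  ID: string;
  Status: "Enabled" | "Disabled";
  Filter: { Prefix: string };
  Expiration: { Days: number };
}

// S3 expiration is expressed in whole days, so round the retention period
// up and add one day of margin (an assumption) so that a stored payload
// never expires before the message that points to it.
function expirationRuleFor(retentionSeconds: number, prefix: string): LifecycleRule {
  const days = Math.ceil(retentionSeconds / SECONDS_PER_DAY) + 1;
  return {
    ID: "expire-large-sqs-payloads", // hypothetical rule name
    Status: "Enabled",
    Filter: { Prefix: prefix },
    Expiration: { Days: days },
  };
}

// Example: a queue that keeps messages for 4 days, with payloads stored
// under a hypothetical "large-payloads/" prefix.
const lifecycleConfiguration = {
  Rules: [expirationRuleFor(4 * SECONDS_PER_DAY, "large-payloads/")],
};
```

With a rule like this in place, the consumer path needs no delete call at all.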

After a phased rollout of the extended client, initial metrics showed over 13,500 large-payload webhooks delivered with a 100% delivery success rate. Even more notably, the solution is available to all of our application queues and jobs, so engineers no longer need to spend time working around the SQS client’s payload limitation.

Our ongoing commitment to optimizing our technical infrastructure

Now that we have released an initial solution to support large message payloads within our queueing system, further optimizations have been roadmapped to improve the implementation. For example, SQS message payloads are not yet consistently compressed and decompressed in the queueing process. Certain data compression algorithms can reach a compression ratio as high as 5:1, which can improve network latency, reduce S3 storage costs for stored documents, and decrease the number of messages stored in S3, since a payload whose raw size exceeds 256 KiB may fit under the limit once compressed.
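A small sketch of that idea, assuming gzip from Node’s built-in `zlib` module and base64 encoding to keep the message body as text. The helper names are hypothetical and the compression algorithm is chosen only for illustration. Note that base64 adds roughly a third to the compressed size, so the check is made on the encoded result.

```typescript
import { gzipSync, gunzipSync } from "node:zlib";

const INLINE_LIMIT_BYTES = 256 * 1024;

// Compress a payload and encode it as base64 text.
function compressPayload(body: string): string {
  return gzipSync(Buffer.from(body, "utf8")).toString("base64");
}

// Reverse of compressPayload.
function decompressPayload(encoded: string): string {
  return gunzipSync(Buffer.from(encoded, "base64")).toString("utf8");
}

// True when the compressed, base64-encoded payload fits inline,
// which would avoid the trip to S3. Base64 output is ASCII, so its
// string length equals its size in bytes.
function fitsInlineAfterCompression(body: string): boolean {
  return compressPayload(body).length <= INLINE_LIMIT_BYTES;
}
```

Actual ratios depend heavily on the data, so a payload that does not shrink enough would still take the S3 path.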

Enhancements like this are part of the dedicated effort that Webflow embarks on to provide dependable infrastructure that third-party developers can build on, and we’re excited to continue uncovering additional opportunities that allow us to ship the best possible products and solutions.

If you’re interested in joining our growing product and engineering organization, check out our careers page to learn more about Webflow and explore our open roles.