
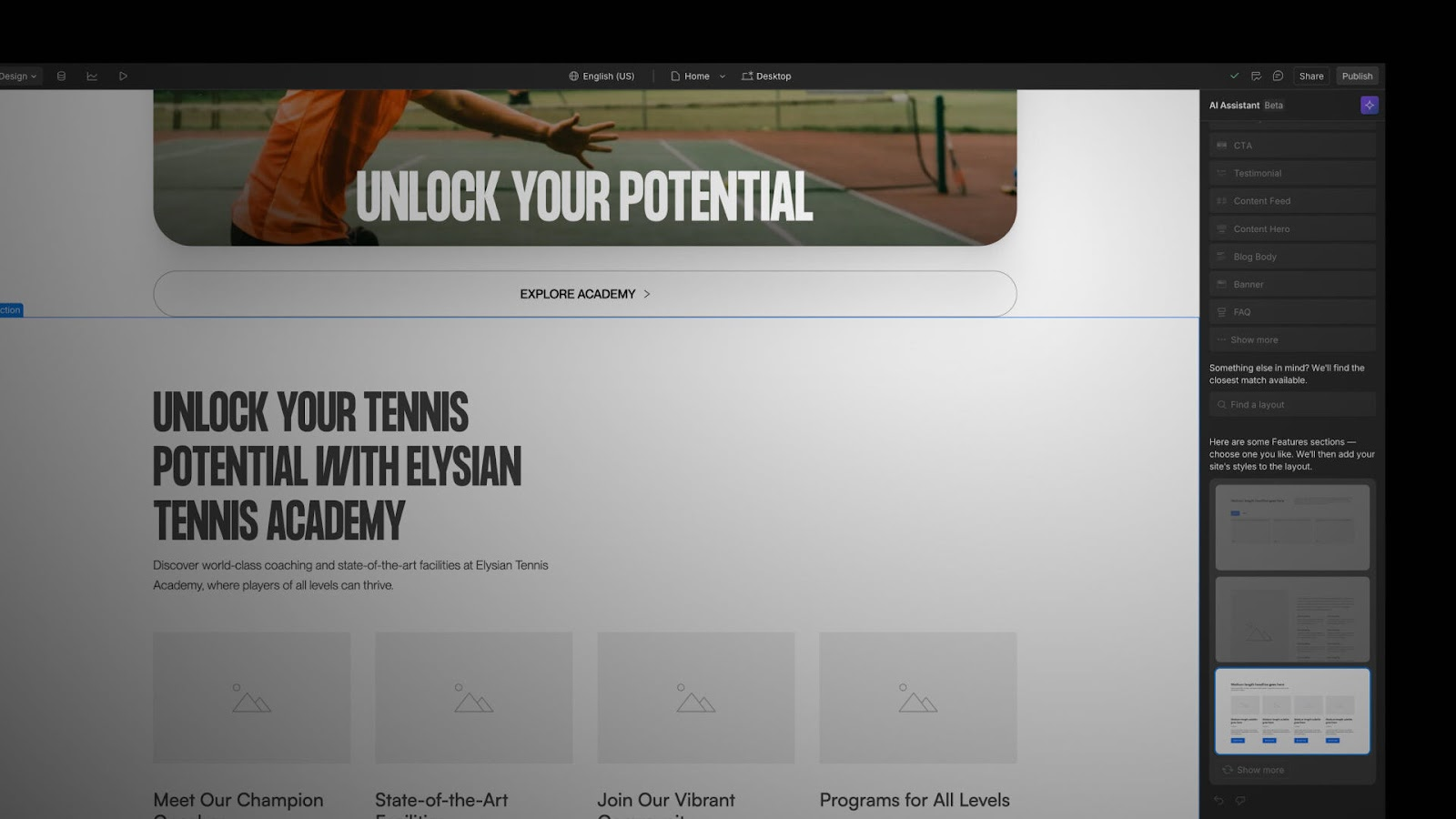

AI Assistant will eventually have all sorts of “skills,” but today its main skill is creating new sections in an existing Webflow site. It lives on the right side of the Designer and looks like this:

I’m writing this post after our public beta launch at Webflow Conf 2024. It felt like a good time to reflect on the ups and downs of developing an AI feature at Webflow.

The AI Assistant launch was great!

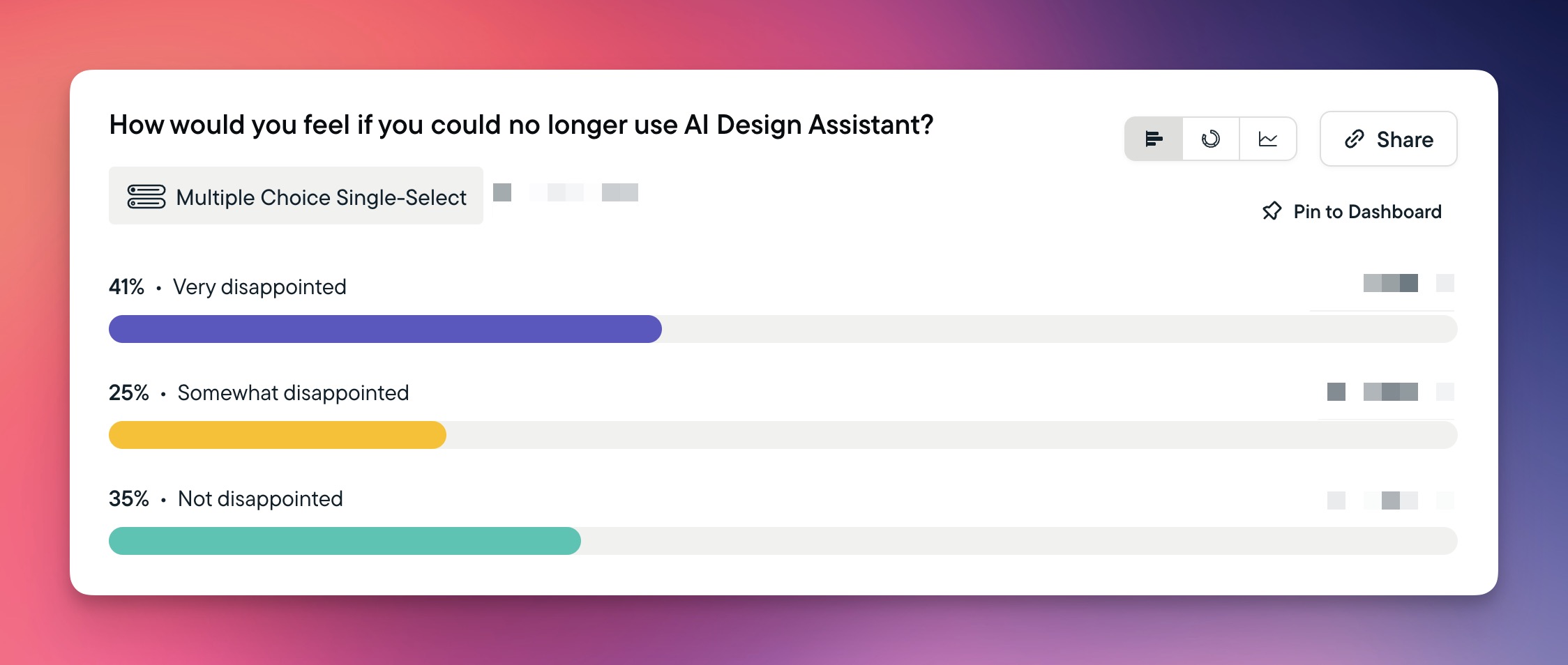

This post spends a lot of time exploring the challenges we faced while developing AI Assistant, but – spoiler alert – I want to say up front that this story has a happy ending. The AI Assistant launch went off without a hitch and our post-launch product-market fit score (41%) surpassed all expectations.

Pulling this off required herculean efforts from the newly formed Applied AI team. I’m forced to use the unspeakably cringey “flying the plane while we’re building it” metaphor: this team had to figure out so many new things about AI product development while at the same time tackling an extremely ambitious AI product.

As a result of this team’s efforts we’ve launched the AI Assistant and its first “skill,” section generation. In addition, we’ve accumulated the AI-specific infrastructure, processes, and institutional knowledge that will accelerate the addition of many more AI Assistant skills in the near future. A smashing success all around!

But we got sucked into many “AI traps”

Although it had a happy ending, the AI Assistant story also had its dramatic setbacks. And while all software projects have setbacks, I couldn’t shake the feeling that this project had unique setbacks — things that seemed specific to AI projects. The typical advice around software development, especially around agile software development, seemed so much harder to apply to this project.

I kept coming back to four “AI traps” that seemed to constantly nudge this project away from quick, iterative, experimental development toward large, risky, waterfall-style development. There were other factors outside our control (e.g., we simply couldn’t have built today’s AI Assistant using 2023’s state-of-the-art models), but these traps explain a good chunk of our AI Assistant delays.

Trap 1: Overestimating current AI abilities

It’s very tempting to assume that we’re about five minutes away from an AI apocalypse. Every morning I wake up to a deluge of Slacks/emails/Tweets announcing new state-of-the-art models, trailblazing AI tools, and magical demos. Even the AI detractors are usually in the “AI is too powerful” camp.

While the detractors might be right in the long term, right now AI is often very stupid. For example, GPT-4o still thinks that white text is readable on white backgrounds:

“The .paragraph class provides a white color for the paragraph text within the cards, ensuring readability against the white background of the cards.”

— Real GPT-4o response
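To make the mistake concrete, here is a minimal sketch of the check that GPT-4o's claim fails. It uses the WCAG 2.x contrast-ratio formula. The function names and the hex-string input format are my own illustrative choices, not anything from the AI Assistant codebase, and the 4.5:1 threshold is the WCAG AA minimum for normal-size text.

```python
def _channel_to_linear(value: int) -> float:
    """Convert one 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = value / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color given as '#rrggbb' (hypothetical input format)."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return (
        0.2126 * _channel_to_linear(r)
        + 0.7152 * _channel_to_linear(g)
        + 0.0722 * _channel_to_linear(b)
    )


def contrast_ratio(foreground: str, background: str) -> float:
    """WCAG contrast ratio: (lighter + 0.05) / (darker + 0.05)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def is_readable(foreground: str, background: str, minimum: float = 4.5) -> bool:
    """True when the pair meets the given minimum ratio (default: WCAG AA, normal text)."""
    return contrast_ratio(foreground, background) >= minimum


if __name__ == "__main__":
    # White text on a white card: identical colors give the lowest possible ratio.
    print(is_readable("#ffffff", "#ffffff"))
    # Black text on a white card: the highest possible ratio.
    print(is_readable("#000000", "#ffffff"))
```

Identical foreground and background colors always produce a ratio of 1, the worst score possible, so white-on-white text cannot pass any readability threshold.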

This tendency to overestimate the current technology slips into Webflow’s roadmap planning. At many points during AI Assistant’s development we said stuff like:

- “…the quality scorecard needs to be 70% green before the April private beta launch…”

- “…let’s just train a model on [insert hideously complex domain here]…”

- “…it’ll take us around 5 engineer-weeks to teach the AI Assistant to apply existing styles…”

All of these claims had some supporting evidence, like demos, proofs of concept (PoCs), or maybe just saved ChatGPT conversations. But in every case we assumed that if we caught a whiff of a successful output from a large language model (LLM), we could eventually coax that same LLM to produce perfect outputs for every edge case.

It turned out we were extremely wrong about all of the assumptions above. It was way more challenging than we thought to improve the scorecard. Training models is slow, expensive, and difficult to do for broad use cases. And we’re still trying to improve how the AI Assistant applies site styles.

This tendency to overestimate AI abilities meant that our agile-looking roadmap with bite-sized goals (e.g., “we’ll ship AI-generated gradients next week to see if users like it”) turned into a months-long slog to produce features that we weren’t sure our users even wanted.

Trap 2: Demo enthusiasm

This is the trap I fell into the most often, because I love demos.

And — to be clear — I think demos are a crucial part of developing AI features. But it’s far too easy to see a demo of “working code” and think, “This feature is basically launched! We just need to add some University articles, update some copy, and bing bang boom let’s SHIP IT!”


Credit: Original article published here.